Object detection: An overview with code examples

Learn what object detection is and how it has evolved. Take a look at some of the most common and practical use cases. Code included.

Gonzalo Chiarlone

Object detection has been a trending topic in computer vision over the last 10 years. This shouldn't surprise anybody, since it allows computers to see the world with their own eyes (or cameras, whatever).

In this article, we will discuss what object detection is and how it can be used, and mention some of the most popular techniques, from classic to state-of-the-art. Finally, we include code examples of some of the most popular models for you to try yourself.

What do we mean when we say "Object detection"?

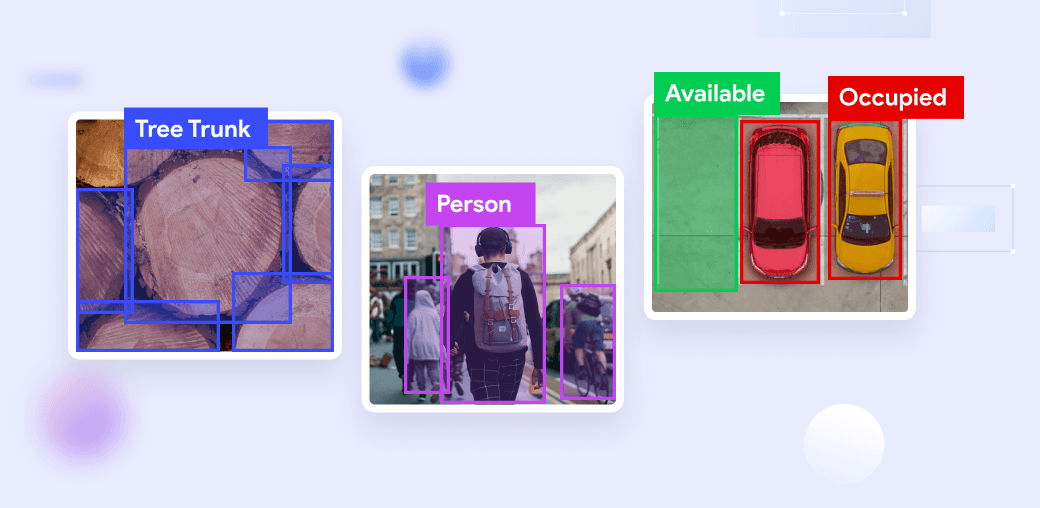

Object detection is the computer vision task that deals with the localization and, most of the time, classification of specific objects in images. This can be done by looking for a single object (left figure), multiple objects of the same class (middle figure) or even multiple objects of multiple classes (right figure).
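To make "localization and classification" concrete, a single detection can be thought of as a bounding box plus a class label and a confidence score. The sketch below is only an illustration: the Detection class is hypothetical (not part of any library), boxes are assumed to be (x1, y1, x2, y2) pixel corners, and the center/width/height view is included because YOLO-style models commonly work with that form.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One detected object: where it is (box) and what it is (label, score)."""
    x1: float  # left edge, in pixels
    y1: float  # top edge
    x2: float  # right edge
    y2: float  # bottom edge
    label: str  # predicted class, e.g. "person"
    score: float  # model confidence in [0, 1]

    @property
    def area(self):
        return (self.x2 - self.x1) * (self.y2 - self.y1)

    @property
    def center_wh(self):
        """Same box expressed as (center_x, center_y, width, height)."""
        w, h = self.x2 - self.x1, self.y2 - self.y1
        return (self.x1 + w / 2, self.y1 + h / 2, w, h)


# An image with several objects (of one or many classes) simply yields a list of detections
dets = [Detection(10, 20, 50, 100, "person", 0.9), Detection(60, 20, 90, 60, "dog", 0.75)]
```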

Object detection use cases

Once you are familiar with the tool, the only limit on use cases is your imagination. Below we mention some of the most common ones. Keep in mind that object detection can be applied not only to a set of still images but also to live video, by running the algorithm frame by frame.

Tracking

One of the most common uses is frame-by-frame tracking in video. This consists of applying an object detection algorithm to each video frame and linking the detections of the same object across frames. It can serve different purposes, like following a football player on the field, tracking a customer in a store, or ensuring nobody enters a restricted zone for security purposes.
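Detecting objects in every frame is only half of tracking: the detections also have to be linked from one frame to the next so that each object keeps the same identity. A common, simple way to do this (not necessarily what any particular product uses) is to match boxes between consecutive frames by their intersection over union (IoU). The sketch below assumes boxes are (x1, y1, x2, y2) tuples and uses greedy matching with an illustrative threshold of 0.3; established trackers such as SORT add motion prediction (a Kalman filter) and optimal assignment (the Hungarian algorithm) on top of this idea.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class IoUTracker:
    """Minimal tracking-by-detection: link each frame's boxes to the previous frame's tracks."""

    def __init__(self, iou_thresh=0.3):
        self.iou_thresh = iou_thresh
        self.tracks = {}  # track_id -> box seen in the previous frame
        self.next_id = 0

    def update(self, detections):
        # Score every (track, detection) pair and consider the best overlaps first
        pairs = sorted(
            ((iou(box, det), tid, j)
             for tid, box in self.tracks.items()
             for j, det in enumerate(detections)),
            reverse=True,
        )
        updated, used_tracks, used_dets = {}, set(), set()
        for overlap, tid, j in pairs:
            if overlap < self.iou_thresh:
                break  # all remaining pairs overlap even less
            if tid in used_tracks or j in used_dets:
                continue  # each track and each detection is matched at most once
            updated[tid] = detections[j]
            used_tracks.add(tid)
            used_dets.add(j)
        # Unmatched detections start new tracks; unmatched tracks are simply dropped
        for j, det in enumerate(detections):
            if j not in used_dets:
                updated[self.next_id] = det
                self.next_id += 1
        self.tracks = updated
        return updated
```

Calling update once per frame returns the current track IDs; the ID that stays attached to a player or customer is what lets you follow them through the video.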


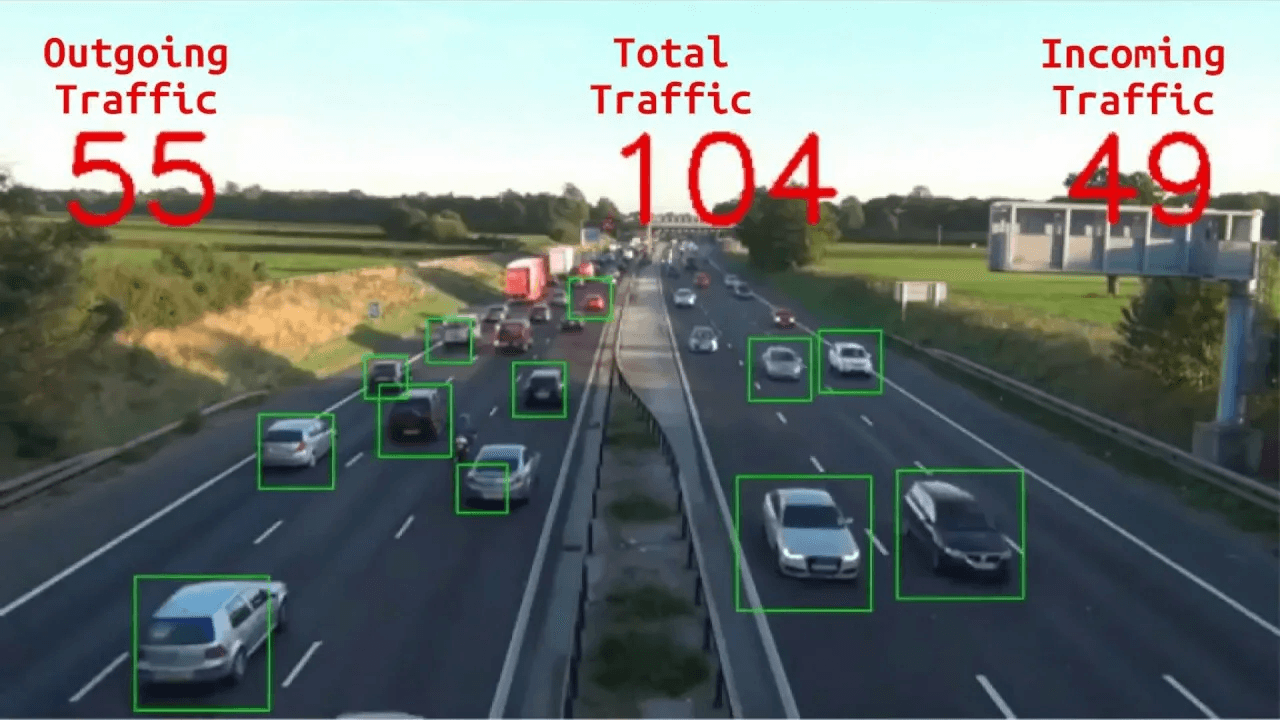

Object counting

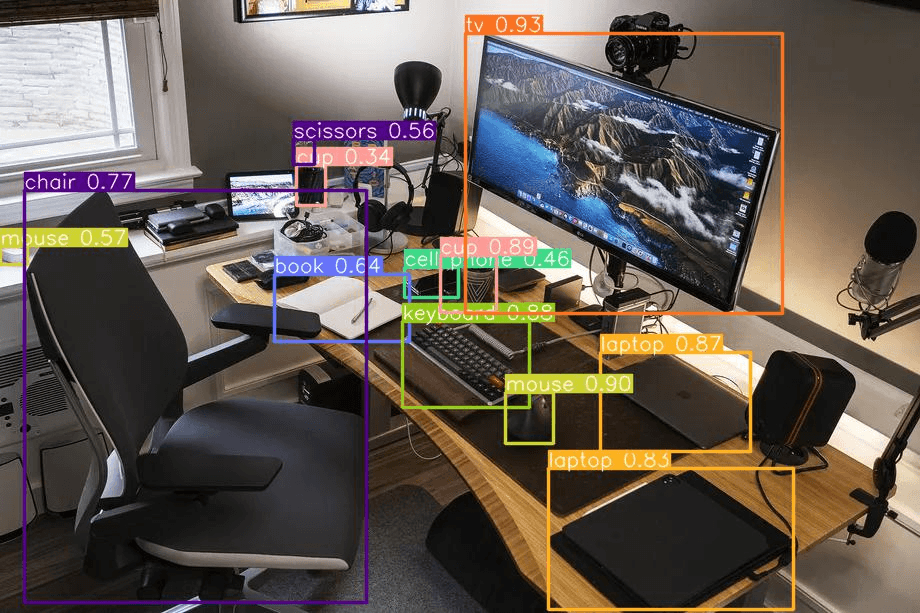

Another common use is counting objects in an image (or a video frame). As in many other examples, this is often faster and more reliable when done by machines rather than by humans. One example is counting the vehicles passing by, as in the figure.
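Once a detector has produced class labels and confidence scores, counting objects in a single image is mostly bookkeeping. The sketch below assumes the detections arrive as (label, score) pairs, a hypothetical format chosen for illustration, and ignores those below a confidence threshold. Counting vehicles that pass by in a video would additionally require tracking, so that each vehicle is counted once rather than once per frame.

```python
from collections import Counter


def count_objects(detections, min_score=0.5):
    """Count detections per class, ignoring low-confidence ones.

    detections: iterable of (label, score) pairs produced by any detector.
    """
    return Counter(label for label, score in detections if score >= min_score)


frame = [("car", 0.91), ("car", 0.84), ("truck", 0.66), ("car", 0.31)]
print(count_objects(frame))  # Counter({'car': 2, 'truck': 1})
```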

Feature detection

Sometimes, you may want to detect only objects with specific characteristics (color, size, or position). This is similar to the previous example, but you might, for instance, look only for red cars.
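One way to look for objects with specific characteristics is to post-filter a generic detector's output: keep only the boxes of the right class, then check size and color inside each box. The sketch below assumes (label, box) detections with (x1, y1, x2, y2) pixel boxes and an RGB image as a NumPy array; the "mostly red" rule and its 1.3 ratio are hypothetical heuristics for illustration, not a standard method.

```python
import numpy as np


def is_mostly_red(image, box, ratio=1.3):
    """Hypothetical color check on the pixels inside a box.

    image: H x W x 3 RGB array; box: (x1, y1, x2, y2) in pixels.
    Returns True if the mean red value exceeds both the mean green and
    the mean blue value by the given ratio.
    """
    x1, y1, x2, y2 = (int(round(v)) for v in box)
    crop = image[y1:y2, x1:x2].reshape(-1, 3).astype(np.float64)
    if crop.size == 0:
        return False  # degenerate box with no pixels
    r, g, b = crop.mean(axis=0)
    return bool(r > ratio * g and r > ratio * b)


def filter_detections(image, detections, label="car", min_area=0):
    """Keep (label, box) detections of the wanted class that are large enough and mostly red."""
    kept = []
    for det_label, box in detections:
        area = (box[2] - box[0]) * (box[3] - box[1])
        if det_label == label and area >= min_area and is_mostly_red(image, box):
            kept.append((det_label, box))
    return kept
```

Position constraints (for example, "only in the left lane") fit the same pattern as one more condition inside the loop.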

Anomaly detection

Similarly, anomaly detection consists of detecting the presence of an object you don't want in an image. Sometimes we instead look for the absence of an object that should be there. For example, you can point a camera at plants and detect whether any of them is dry or has odd-looking leaves, based purely on what the camera sees.
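Both flavors of anomaly, something present that shouldn't be and something missing that should be there, can be checked against the set of class names a detector returns. The sketch below is a hypothetical helper; the class names "dry_leaf" and "water_tank" are made up for the example and would require a model trained to recognize them.

```python
def check_scene(detected_labels, forbidden=(), required=()):
    """Flag unwanted objects that are present and expected objects that are missing.

    detected_labels: class names returned by a detector for one image or frame.
    forbidden / required: application-specific sets of class names.
    """
    present = set(detected_labels)
    return {
        "unexpected": sorted(present & set(forbidden)),
        "missing": sorted(set(required) - present),
    }


report = check_scene(["plant", "plant", "dry_leaf"],
                     forbidden={"dry_leaf"}, required={"plant", "water_tank"})
print(report)  # {'unexpected': ['dry_leaf'], 'missing': ['water_tank']}
```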

Medical images

Even though it is a sub-case of anomaly detection, this application has become a field of its own. The idea is to detect the presence of different objects (or living beings) in a medical image (such as an MRI, an X-ray, or a CT scan), helping doctors decide where to look.

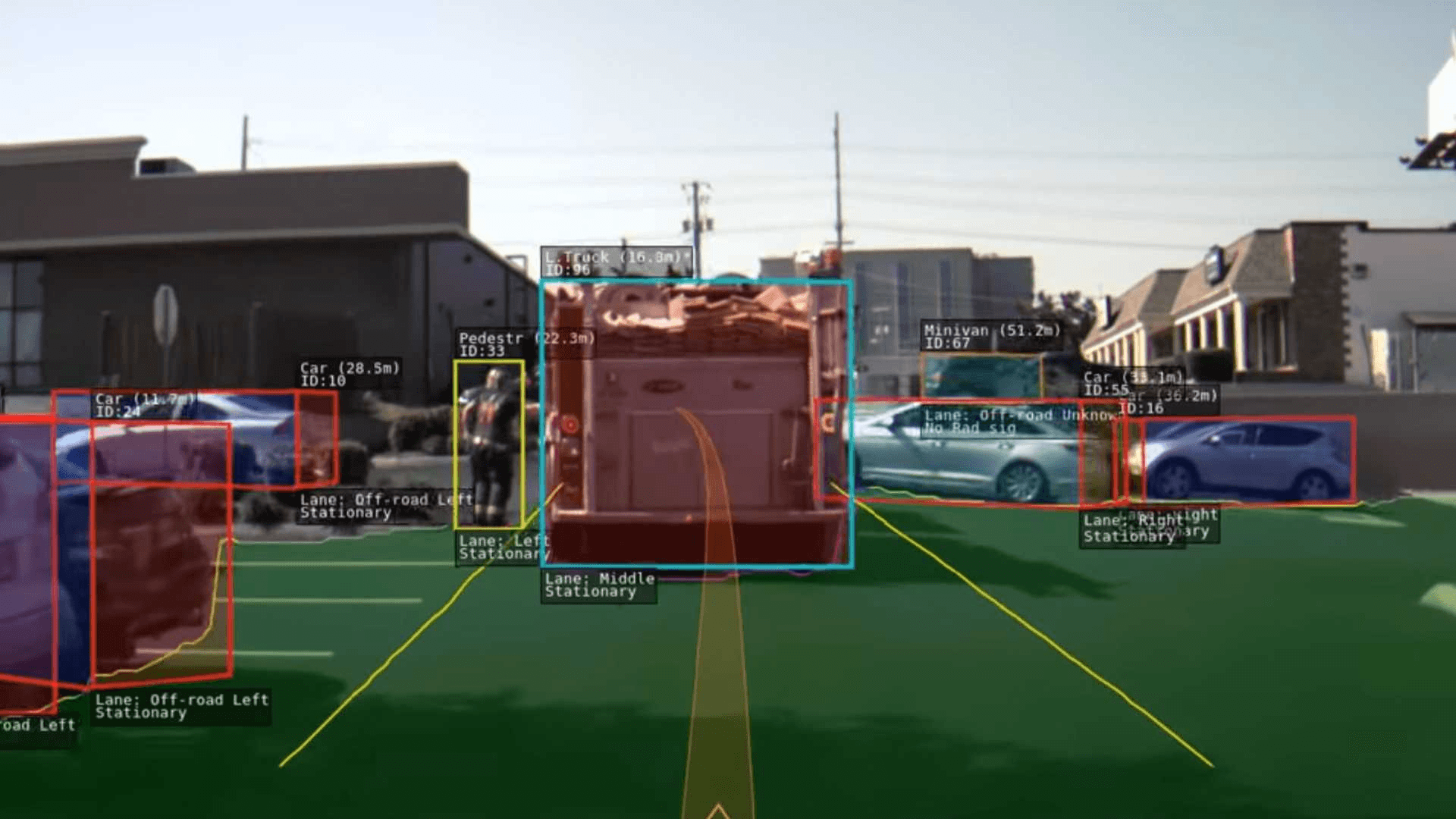

Robots

Just like animals, robots need to "see" the world in their own way. But sometimes seeing is not enough: they also have to know what they are looking at. A well-known example is autonomous cars, which have to distinguish between road signs, cars, pedestrians, traffic lights, etc. to decide how to respond.

Object detection evolution

Computer vision has made enormous progress in the last couple of decades, and object detection is no exception. To understand where it stands today, it helps to look at some of the most important changes in its brief history. Broadly, that history can be divided into two eras: before and after deep learning (BDL and ADL).

Before deep learning was applied to object detection, methods relied on hand-crafted features: descriptors computed by dedicated algorithms directly from the image pixels. These methods are sometimes called traditional object detectors. Three representative examples of this era are:

- Haar-like features: Viola and Jones used these in their object-detection research to detect faces, based on three basic feature types: edge, line, and four-rectangle features. reference

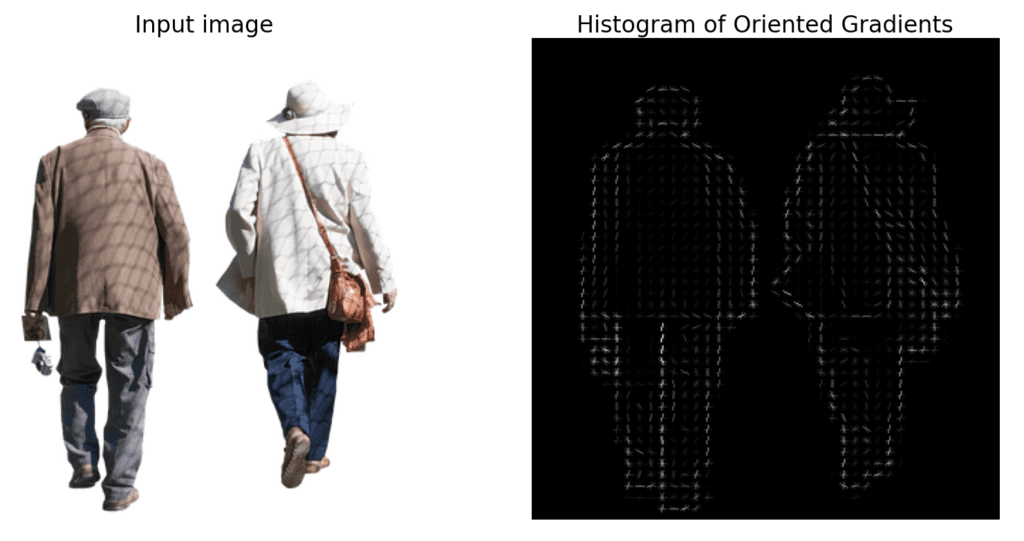

- HOG Detector: Histograms of Oriented Gradients gained popularity after Dalal and Triggs presented them at CVPR (Conference on Computer Vision and Pattern Recognition) in 2005. This method counts how often each gradient orientation appears in localized portions (cells) of an image. reference

- DPM: The Deformable Parts Model consists of a group of templates arranged in a deformable configuration: one global (root) template and several part templates. reference
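To give a feel for what "hand-crafted features" means, the sketch below computes two of the features described above with NumPy. The Haar-like edge feature is evaluated with an integral image (summed-area table), the trick Viola and Jones used to compute any rectangle sum with four lookups. The HOG part computes the orientation histogram of a single cell with 9 unsigned bins over 0 to 180 degrees and magnitude-weighted votes. This is a simplified illustration under those assumptions: the full HOG descriptor also interpolates votes between bins and normalizes over blocks of cells, and a real detector evaluates thousands of such features.

```python
import numpy as np


def integral_image(img):
    """Summed-area table with a zero row/column: ii[y, x] == img[:y, :x].sum()."""
    ii = np.zeros((img.shape[0] + 1, img.shape[1] + 1), dtype=np.float64)
    ii[1:, 1:] = img.cumsum(axis=0).cumsum(axis=1)
    return ii


def rect_sum(ii, y, x, h, w):
    """Sum of the h x w rectangle whose top-left pixel is (y, x), using four lookups."""
    return ii[y + h, x + w] - ii[y, x + w] - ii[y + h, x] + ii[y, x]


def haar_edge_feature(ii, y, x, h, w):
    """Two-rectangle (edge) feature: left half minus right half; w should be even."""
    half = w // 2
    return rect_sum(ii, y, x, h, half) - rect_sum(ii, y, x + half, h, half)


def hog_cell_histogram(cell, n_bins=9):
    """Orientation histogram of one HOG cell, with votes weighted by gradient magnitude."""
    gy, gx = np.gradient(cell.astype(np.float64))  # derivatives along rows (y) and columns (x)
    magnitude = np.hypot(gx, gy)
    orientation = np.rad2deg(np.arctan2(gy, gx)) % 180  # unsigned orientation in [0, 180)
    hist, _ = np.histogram(orientation, bins=n_bins, range=(0, 180), weights=magnitude)
    return hist
```

A bright-left/dark-right patch gives a large edge feature value, and a patch whose intensity increases from left to right puts all of its histogram mass into the first (near 0 degree) orientation bin.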

Progress stalled around 2010 until AlexNet appeared in 2012, starting the deep learning (DL) era. AlexNet was a convolutional neural network (CNN) trained with data augmentation, and it achieved the lowest error rate to that date on the ImageNet (ILSVRC 2012) image classification challenge.

CNNs had previously been applied to handwriting recognition, but computational limitations and the lack of large enough datasets kept them from scaling to recognizing objects in a wider range of images. AlexNet tackled this problem. reference

The models proposed in the last decade can be sorted into two main categories:

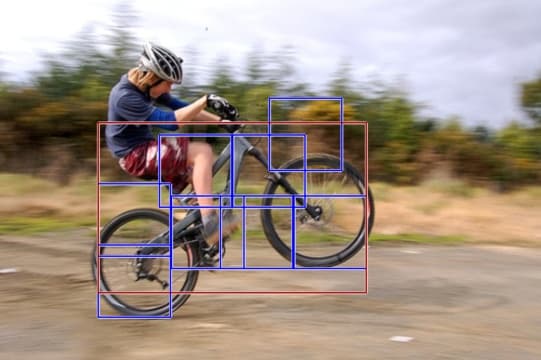

- Two-stage methods: Derived from R-CNN, these methods use a first stage to propose regions that may contain objects. Those regions are then used as input for a second stage, which classifies the objects and refines their bounding boxes. Most of these methods build on earlier research, each addressing a specific drawback of its predecessors: SPP-Net, Fast R-CNN, Faster R-CNN, FPN, Mask R-CNN, and Cascade R-CNN. reference

- One-stage methods: These methods predict the objects' bounding boxes and classes directly from the image in a single step. Compared with the two-stage methods discussed above, they are faster and simpler, but sometimes not as flexible. Examples are SSD and YOLO; we include a small YOLO demo below for you to try on your own. reference
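To illustrate what "directly predicts bounding boxes" means, the sketch below decodes the output of a simplified YOLOv1-style head: the image is divided into an S x S grid, and every cell predicts one box (center offset inside the cell, size relative to the image), an objectness score, and class probabilities, all in one forward pass with no separate region-proposal stage. The array layout and the 0.5 threshold are assumptions for illustration; YOLOv5 uses anchor boxes and several output scales, so its actual decoding differs. Overlapping duplicate boxes are usually removed afterwards with non-maximum suppression.

```python
import numpy as np


def decode_grid(pred, img_w, img_h, conf_thresh=0.5):
    """Decode a simplified YOLOv1-style output grid (one box per cell).

    pred: array of shape (S, S, 5 + C). For each cell: x, y (box center offset
    inside the cell, in [0, 1]), w, h (box size as a fraction of the image),
    objectness, then C class probabilities.
    Returns a list of (x1, y1, x2, y2, score, class_index) in pixels.
    """
    S = pred.shape[0]
    detections = []
    for row in range(S):
        for col in range(S):
            x, y, w, h, objectness = pred[row, col, :5]
            class_probs = pred[row, col, 5:]
            cls = int(np.argmax(class_probs))
            score = float(objectness * class_probs[cls])  # class-specific confidence
            if score < conf_thresh:
                continue
            # Convert cell-relative center and image-relative size to pixel corners
            cx, cy = (col + x) / S * img_w, (row + y) / S * img_h
            bw, bh = w * img_w, h * img_h
            detections.append((cx - bw / 2, cy - bh / 2, cx + bw / 2, cy + bh / 2, score, cls))
    return detections
```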

Code examples

Now it's time to try a real example of object detection. We will use two well-known models: YOLOv5 (You Only Look Once) and Detic.

Example 1: YOLOv5

The first example uses YOLOv5, one of the most popular detectors nowadays and a go-to choice for real-time object detection, mainly because of its good balance of speed and accuracy.

Let's start by installing some dependencies:

```bash
pip install -qr https://raw.githubusercontent.com/ultralytics/yolov5/master/requirements.txt
```

Then, we have to load the image on which we will run the model:

from PIL import Image

import requests

from io import BytesIO

# Image url

image_url = "https://ultralytics.com/images/zidane.jpg"

# Load Image

response = requests.get(image_url)

image = Image.open(BytesIO(response.content))

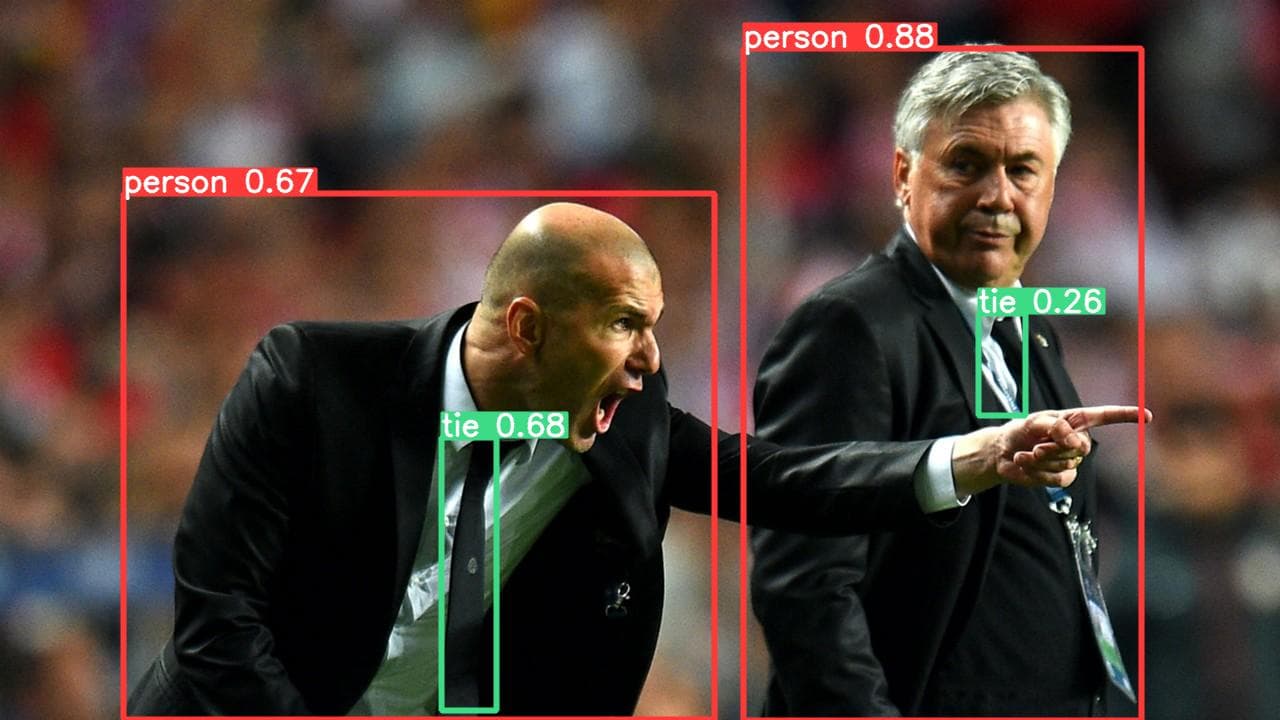

image.show()If everything goes right, the following image should open:

Now we have to load the model with PyTorch:

```python
import torch

# Model
model = torch.hub.load("ultralytics/yolov5", "yolov5s", pretrained=True)
```

Notice that we set the pretrained parameter to True. This tells PyTorch that we want to use the weights obtained from training on the 80-class COCO dataset (for more details, go to YOLOv5). These weights can be fine-tuned for a particular purpose by training on a dataset of your choice (one of the many things we do here at Pento).

After loading the image and the model, we can now run the model on this image or, as it is usually called, run inference:

```python
# Inference
results = model(image)

# Print a summary of the results
results.print()

# Show the detections drawn on the image
results.show()
```

If you followed every step correctly, two things should happen:

First, something like this should appear in the console:

```
image 1/1: 720x1280 2 persons, 2 ties
Speed: 3.7ms pre-process, 119.4ms inference, 1.1ms NMS per image at shape (1, 3, 384, 640)
```

What does this tell us? For the only image in the batch (1/1), with a size of 720x1280 pixels, the model detected 2 objects classified as "person" and 2 classified as "tie." The rest is information about the speed of each step: pre-processing, inference, and NMS.
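The NMS timing in the console output refers to non-maximum suppression, the post-processing step that removes duplicate boxes predicted for the same object. A minimal greedy, class-agnostic version is sketched below to show the idea; it is not YOLOv5's own implementation, boxes are assumed to be (x1, y1, x2, y2) tuples, and the 0.45 IoU threshold is just an illustrative value.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_thresh=0.45):
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap it too much, repeat.

    Returns the indices of the kept boxes, highest score first.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_thresh]
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 10, 10), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]: box 1 overlaps box 0 with IoU 0.81 and is suppressed
```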

Then, the following image should pop up:

Here we can see a bounding box around each object, each with its class label and the confidence score of the prediction.

Example 2: Detic

Our final example uses a newer detector called Detic (Detector with Image Classes). It can be considered a two-stage detector, and it focuses on expanding the vocabulary of classes it can recognize. Primarily, it brings language into the classification step: class names are represented with CLIP text embeddings. But don't worry. All you need to know is that you can include many more classes, resulting in images full of labels.
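The idea of a vocabulary defined by class names can be sketched in a few lines: if region features and class-name embeddings live in the same vector space (as with CLIP), each region can be assigned the class whose embedding is most similar to it, and changing the vocabulary just means swapping the list of embeddings, without retraining. The function and the toy 3-dimensional vectors below are hypothetical and only illustrate the principle; Detic's real classification head has more components than this.

```python
import numpy as np


def classify_regions(region_feats, class_embeds, class_names):
    """Assign each region the class whose embedding has the highest cosine similarity.

    region_feats: (num_regions, D) array; class_embeds: (num_classes, D) array.
    Returns a list of (class_name, similarity) pairs, one per region.
    """
    r = region_feats / np.linalg.norm(region_feats, axis=1, keepdims=True)
    c = class_embeds / np.linalg.norm(class_embeds, axis=1, keepdims=True)
    sims = r @ c.T  # (num_regions, num_classes) cosine similarities
    best = sims.argmax(axis=1)
    return [(class_names[k], float(sims[i, k])) for i, k in enumerate(best)]


regions = np.array([[0.1, 2.0, 0.0], [3.0, 0.2, 0.1]])

# Vocabulary A
print([name for name, _ in classify_regions(regions, np.eye(3), ["person", "tie", "umbrella"])])
# Vocabulary B: same regions, different class names and embeddings, no retraining
print([name for name, _ in classify_regions(regions, np.eye(3)[:2], ["dog", "cat"])])
```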

First, let's set up our working environment:

```bash
pip install torch torchvision torchaudio

git clone https://github.com/facebookresearch/detectron2.git
cd detectron2
pip install -e .
cd ..

git clone https://github.com/facebookresearch/Detic.git --recurse-submodules
cd Detic
pip install -r requirements.txt
```

Now, if you only want to run Detic on a single image from the terminal, you can use the following command. It assumes that the model weights have been downloaded into the "models" folder (see the Detic repository) and that zidane.jpg from the first example is in the parent folder:

```bash
python demo.py \
  --config-file configs/Detic_LCOCOI21k_CLIP_SwinB_896b32_4x_ft4x_max-size.yaml \
  --cpu \
  --input ../zidane.jpg \
  --output out.jpg \
  --vocabulary lvis \
  --opts MODEL.WEIGHTS models/Detic_LCOCOI21k_CLIP_SwinB_896b32_4x_ft4x_max-size.pth
```

However, if you want a script that you can run as many times as you like and customize, you can use the following code:

NOTE: This script must be placed inside the "Detic" folder, since it uses paths relative to it.

We start by importing some dependencies and declaring some constants:

```python
import sys

import torch
from detectron2.config import get_cfg
from detectron2.data import MetadataCatalog
from detectron2.data.detection_utils import read_image
from detectron2.engine import DefaultPredictor
from detectron2.utils.visualizer import ColorMode, Visualizer

# CenterNet2 ships as a submodule of Detic; make it importable
sys.path.insert(0, "third_party/CenterNet2/")
from centernet.config import add_centernet_config
from detic.config import add_detic_config
from detic.modeling.utils import reset_cls_test

# Classifier files (class-name embeddings) for each built-in vocabulary
BUILDIN_CLASSIFIER = {
    "lvis": "datasets/metadata/lvis_v1_clip_a+cname.npy",
    "objects365": "datasets/metadata/o365_clip_a+cnamefix.npy",
    "openimages": "datasets/metadata/oid_clip_a+cname.npy",
    "coco": "datasets/metadata/coco_clip_a+cname.npy",
}

# Dataset names whose metadata holds the class names of each vocabulary
BUILDIN_METADATA_PATH = {
    "lvis": "lvis_v1_val",
    "objects365": "objects365_v2_val",
    "openimages": "oid_val_expanded",
    "coco": "coco_2017_val",
}
```

Then we have to choose the configuration of our model. Two settings are important here:

- the model device: in this case, we are going to run the model on the CPU;

- and the model weights: similar to torch.hub.load in the first example, but now we load the weights from a local file.

```python
# Load the model's configuration
cfg = get_cfg()
add_centernet_config(cfg)
add_detic_config(cfg)
cfg.merge_from_file("configs/Detic_LCOCOI21k_CLIP_SwinB_896b32_4x_ft4x_max-size.yaml")

# Keep only predictions with a confidence score of at least 0.5
cfg.MODEL.RETINANET.SCORE_THRESH_TEST = 0.5
cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = 0.5
cfg.MODEL.PANOPTIC_FPN.COMBINE.INSTANCES_CONFIDENCE_THRESH = 0.5
# Placeholder classifier; the chosen vocabulary's classifier is loaded later with reset_cls_test
cfg.MODEL.ROI_BOX_HEAD.ZEROSHOT_WEIGHT_PATH = "rand"

# Run on the CPU and load the weights from a local file
cfg.MODEL.DEVICE = "cpu"
cfg.MODEL.WEIGHTS = "models/Detic_LCOCOI21k_CLIP_SwinB_896b32_4x_ft4x_max-size.pth"

# Make the configuration read-only
cfg.freeze()
```

After this preparation, we can finally instantiate the model. We call it "predictor" since we only use it to make predictions (inference). Notice that we have to choose a vocabulary from the ones defined in "BUILDIN_CLASSIFIER" and "BUILDIN_METADATA_PATH". You can try changing it to obtain different results.

```python
vocabulary = "lvis"  # try 'lvis', 'objects365', 'openimages', or 'coco'

predictor = DefaultPredictor(cfg)

# Swap in the classifier and class names of the chosen vocabulary
metadata = MetadataCatalog.get(BUILDIN_METADATA_PATH[vocabulary])
classifier = BUILDIN_CLASSIFIER[vocabulary]
num_classes = len(metadata.thing_classes)
reset_cls_test(predictor.model, classifier, num_classes)
```

After that, we only need to run the model and visualize the results.

```python
# Read the input image in BGR order, which DefaultPredictor expects by default
im = read_image("../zidane.jpg", format="BGR")

predictions = predictor(im)

# Visualizer expects RGB, so reverse the channel order;
# the metadata of the chosen vocabulary provides the class names
visualizer = Visualizer(im[:, :, ::-1], metadata=metadata, instance_mode=ColorMode.IMAGE)
instances = predictions["instances"].to(torch.device("cpu"))
vis_output = visualizer.draw_instance_predictions(predictions=instances)

print(predictions)
vis_output.save("detic_output.jpg")
```

This should print something similar to this:

{'instances': Instances(num_instances=3, image_height=720, image_width=1280, fields=[pred_boxes: Boxes(tensor([[ 743.0906, 40.1450, 1147.4911, 718.4363],

[ 109.0441, 199.6036, 1133.3624, 716.3141],

[1095.8981, 314.3076, 1279.4323, 717.0710]])), scores: tensor([0.9285, 0.9030, 0.5115]), pred_classes: tensor([0, 0, 0]), pred_masks: tensor([[[False, False, False, ..., False, False, False],

[False, False, False, ..., False, False, False],

[False, False, False, ..., False, False, False],

...,

[False, False, False, ..., False, False, False],

[False, False, False, ..., False, False, False],

[False, False, False, ..., False, False, False]],

[[False, False, False, ..., False, False, False],

[False, False, False, ..., False, False, False],

[False, False, False, ..., False, False, False],

...,

[False, False, False, ..., False, False, False],

[False, False, False, ..., False, False, False],

[False, False, False, ..., False, False, False]],

[[False, False, False, ..., False, False, False],

[False, False, False, ..., False, False, False],

[False, False, False, ..., False, False, False],

...,

[False, False, False, ..., False, False, False],

[False, False, False, ..., False, False, False],

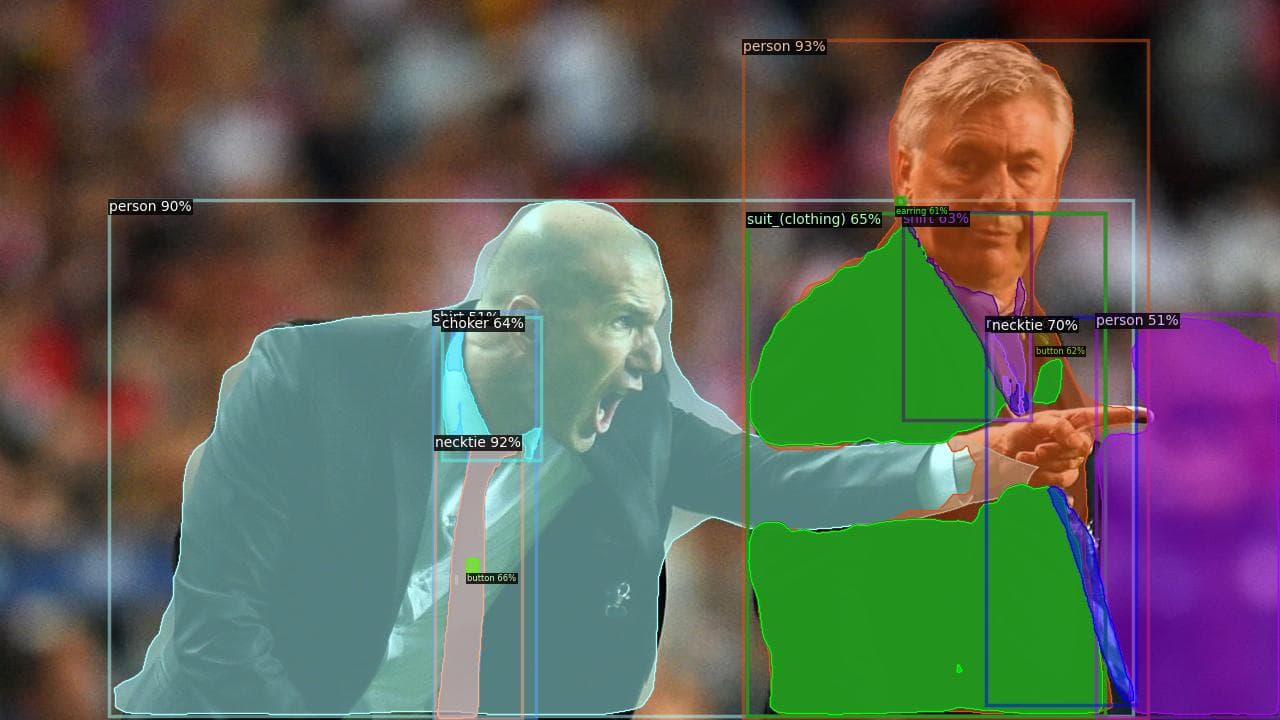

[False, False, False, ..., False, False, False]]])])}And also, it should save an image named "detic_output.jpg", which should look like the image below, depending on the vocabulary you chose.

Here we can see three main differences compared with YOLO:

- Detic also outputs segmentation masks (drawn as colored overlays) in addition to bounding boxes.

- Detic detected some classes that YOLO did not.

- For the objects both models detected, Detic reports higher confidence scores.
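A mask carries more information than a box: the box around an object can be derived from its mask, but not the other way round. Assuming each mask is a boolean H x W NumPy array (for instance, one row of instances.pred_masks converted with .numpy() in the script above), the sketch below computes a mask's tight bounding box and its area in pixels. The helper names are hypothetical, and the exclusive x2/y2 convention is a choice made here to match array slicing.

```python
import numpy as np


def mask_to_box(mask):
    """Tight (x1, y1, x2, y2) box around the True pixels of a boolean H x W mask.

    x2 and y2 are exclusive, matching array slicing. Returns None for an empty mask.
    """
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        return None
    return (int(xs.min()), int(ys.min()), int(xs.max()) + 1, int(ys.max()) + 1)


def mask_area(mask):
    """Number of pixels covered by the mask (usually smaller than its box area)."""
    return int(np.count_nonzero(mask))
```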

So, what have we learned?

So that's it! That's all it takes to use a generic object detector! Go ahead and try it on your own set of images. Of course, a more custom solution would require both data handling and training, but this is all you need to have your own simple object detector.

Object detection has become an essential tool for automation in many areas, from security to medical applications. The idea here was to give you some background and two basic examples to start experimenting on your own, and we hope we made it easy enough. The limit of this tool is your imagination.