Enhancing Image Quality with Visual AI: How does it work?

Super-resolution consists of using AI to automatically enhance image quality. Going from a low-resolution image to an upscaled, HD version.

What is super-resolution?

Single image super-resolution is one of the many computer vision tasks that can be done for image enhancing. It consists of going from a *low-resolution image**, to an upscaled or improved version.

Why would someone want to do this? There are many different industries where improving an image's resolution could be of use.

One of them is retail, where the automatic image retouching to enhance their quality leads to an increase of purchase intention, as seen on our retail blog post. But its applications are not limited to product pictures.

This technique could be applied to surveillance, satellite and medical image enhancement, as well as image rendering.

Technical overview

Now, how do we assess the quality of the super-resolution image? How do we account for it being realistic or not? Well, unlike most computer vision problems, where only objective metrics are taken into account, subjective methods are needed for super-resolution. Let's go over the objective ones and keep for last the most interesting one for enhancing image quality.

The two main objective metrics to check for a correct image reconstruction are Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity (SSIM). PSNR consists of the Mean Square Error (MSE) between the pixels from the high-resolution image and the super-resolution one for each color channel.

Basically, similar pixels between the original and the reconstructed image lead to a good PSNR, but there is no perceptual consideration involved. SSIM aims at comparing both images perceptually by considering the difference in luminance, structure and contrast. Therefore, spatially close pixel dependency is taken into account.

Now, how can you really measure if an image looks more realistic to the human eye than another one? By actually involving the human eye. Yes, that is correct. The subjective metric consists of a Mean Opinion Score (MOS). Basically, a set of viewers compare the original image and the reconstructed one and assign them a numeric value or rating. This, of course, is expensive, time consuming and subjective to the viewers but still is the chosen option when comparing state of the art methods.

Enhancing Image Quality: State-of-The-Art Solutions

We have mentioned a few of the applications of single image super-resolution and how to evaluate the results. Let's dive into the different methods that can be used, from a brief historic overview to the current state of the art image quality-enhancing methods.

Before deep learning models, bicubic and Lanczos interpolation or worst, nearest neighbor interpolation, were the way to upscale images. This meant either pixelated or blurry outputs. However, SRCNN appeared in 2014 to save the day.

Using a quite shallow neural network for single image super-resolution (SISR) they were able to achieve a PSNR of 26.9 and a SSIM of 0.710 for BSD100 (4x upscaling), one of the reference datasets for image super-resolution.

A breakthrough for Image Quality-Enhancing

The next breakthrough on image super-resolution came in 2016 when SRResNet and SRGAN were published by Ledig et. al., achieving the state-of-the-art at the time with SRResNet and introducing the mean-opinion-score for super-resolution benchmarks.

With a depth of 16 blocks, SRResNet is a ResNet that aims to minimize de MSE between the predicted super-resolution image and the high-resolution one. This makes this network ideal when evaluated with PSNR or SSIM, key metrics for super-resolution benchmarks. However, this doesn't necessarily mean realistic results.

So, to take this into consideration, Ledig et.al. considered a mean-opinion-score (MOS), which established SRGAN as the state-of-the-art for photo-realistic SR with 4x upscaling.

Generative adversarial networks (GANs) consist of two networks that compete against each other. One of them, called generative network, tries to create an image (or another type of output) as realistic as possible, whereas the other one, named adversarial network, tries to say whether the output is artificial or not.

Basically, the generative network is learning to fool the adversarial one, and the adversarial network is dynamically improving its detection ability. As a consequence, GANs are able to learn in an unsupervised manner and achieve realistic results.

So, how did they achieve this? By employing a ResNet with skip-connections for the generator and not focusing only on MSE for optimization. Instead, the implemented perceptual loss combines an adversarial loss and a content loss.

On one hand, the first one arises from the discriminator network by comparing the super-resolution image with the real one. This leads to more photo-realistic results.

On the other hand, the content loss instead aims to get perceptual similarity instead of a pixel-wise one. How? Well, even though pixel-wise MSE loss gets really good PSNR results, it does not achieve high-frequency content but instead smooth and unrealistic ones. To tackle this problem, a VGG loss was proposed by Ledig et.al. as well as a MSE one, where the VGG loss is calculated by comparing the features of the pre-trained 19 layer VGG network against the original image.

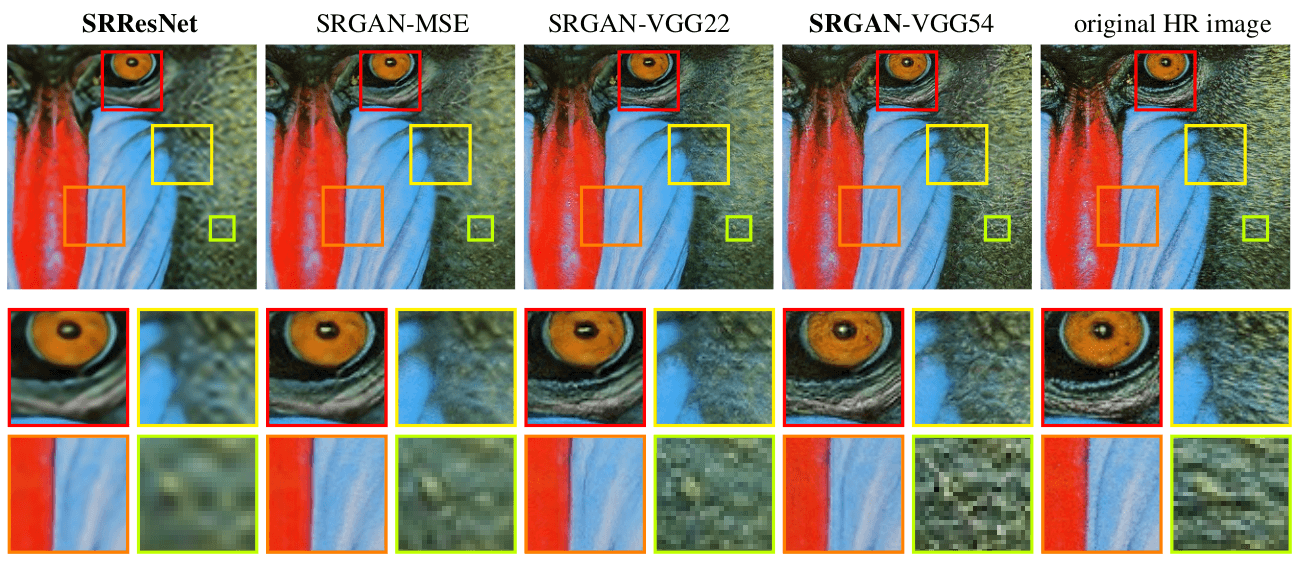

The results achieved with the different variations of SRGAN are shown on the next figure, taken from SRResNet and SRGAN's paper.

In 2018, Wang et.al. improved both the architecture and loss functions from SRGAN and introduced ESRGAN. Regarding the architecture, they removed batch normalization and changed the residual blocks from SRGAN to Residual in Residual Dense Blocks (RRDB).

Also, SRGAN's discriminator predicts the probability of an image being natural, while ESRGAN's discriminator predicts the probability of an image being more realistic than an artificial image.

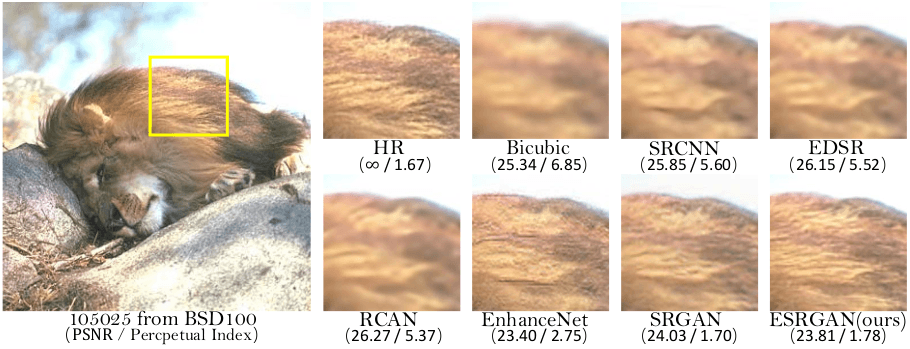

Finally, the perceptual loss is improved by using the features prior to the activation layer. On the following image (taken from ESRGAN's paper), it becomes clear that even though ESRGAN does not lead to the best PSNR, it generates the most realistic output.

Our take on Enhancing Image Quality

All in all, it is fascinating to see the evolution of this computer vision task on both metrics and models. Initially, the goal was to make the super-resolution image as similar as the high-resolution one. And SRCNN and SRResNet excel at this, resulting in higher PSNR and SSIM than SRGAN and ESRGAN on several benchmarks.

However, the shift into realistic results changed the rules. The enhanced image did not necessarily need to be similar to the high-resolution one in a pixel-wise way.

Instead, it needed to look realistic to an actual human being. This leads to a change of paradigm that makes GANs better for the job of enhancing image quality, at least for now...